What is BERT?

BERT stands for Bidirectional Encoder Representations from Transformers. It is a natural language processing (NLP) technique that Google has introduced as an update to its search algorithm.

What does it intend to improve?

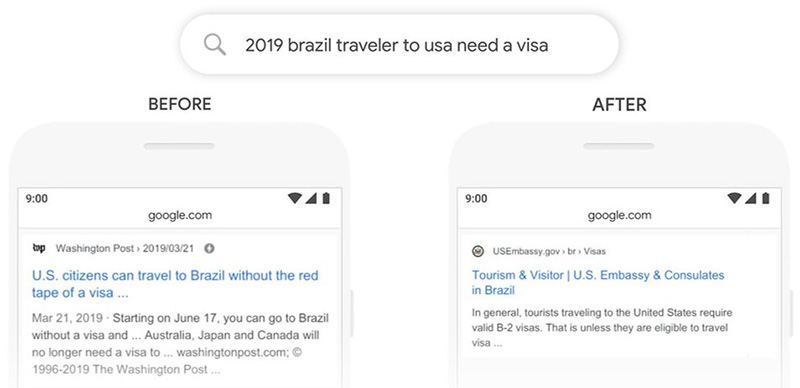

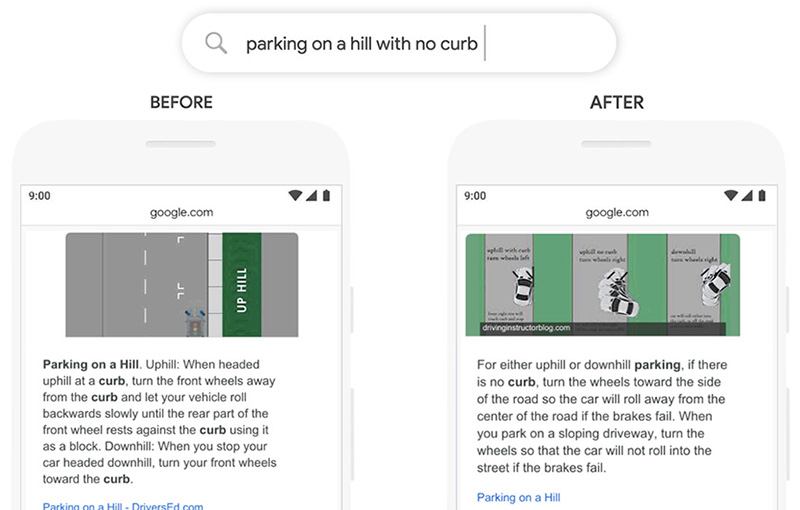

It is an AI algorithm update designed to help Google better understand natural, conversational language.

When will BERT be triggered?

Google can apply BERT to any search, in combination with its other signals, to understand a query and match it to the best, most relevant set of results.

This new BERT signal should help Google understand the true intent and context behind a search, for both SEO and ads, and therefore improve relevancy. Google is all about creating a better experience for its users, and relevancy is something it prizes above all.

If it is effective, BERT should help both the advertiser and the user. It takes AI one step closer to understanding the nuances of human language. The update aims to understand the importance of smaller connecting words and the relationships between them. Words like “to” and “or” that may previously have been overlooked are now assessed for their contribution to the syntax of the query as a whole.
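To see why connecting words matter, here is a minimal Python sketch. It is a toy keyword matcher, not Google's system and not BERT itself; the stop-word list, the function names, and the example queries are all hypothetical and chosen only for illustration. It shows that two searches with opposite intent look identical once the small words and the word order are thrown away.

```python
# Illustrative only: a toy keyword matcher, NOT how Google or BERT works.
# The stop-word list below is a hypothetical example.
STOP_WORDS = {"to", "or", "from", "a", "the", "for"}


def keyword_bag(query):
    """Reduce a query to its set of content words, dropping stop words and order."""
    return frozenset(w for w in query.lower().split() if w not in STOP_WORDS)


def ordered_tokens(query):
    """Keep every word, including connecting words, in its original position."""
    return tuple(query.lower().split())


q1 = "flights from london to paris"
q2 = "flights from paris to london"

# With connecting words and order discarded, the two queries are indistinguishable.
same_bag = keyword_bag(q1) == keyword_bag(q2)

# With every word kept in place, the difference in intent is still visible.
same_sequence = ordered_tokens(q1) == ordered_tokens(q2)

print("Same keyword bag:", same_bag)
print("Same word sequence:", same_sequence)
```

A system that only sees the keyword bag cannot tell which city the searcher is leaving from. Keeping words like “to” and “from” in context is what allows the direction of travel to be recovered.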

We do not foresee any negatives from the update and look forward to any improvements in how accurately search queries are matched to keywords.

Get in touch if you want to learn more about BERT.